A brief history of dependency management

When I started programming in the late 80’s, dependency management definitely wasn’t on my mind. I mostly used BASIC and while my beloved C64 had a thing called BASIC extensions (like the famous SIMONS BASIC) which worked a little like libraries, for most of them there was no obvious way of bundling them together with your program.

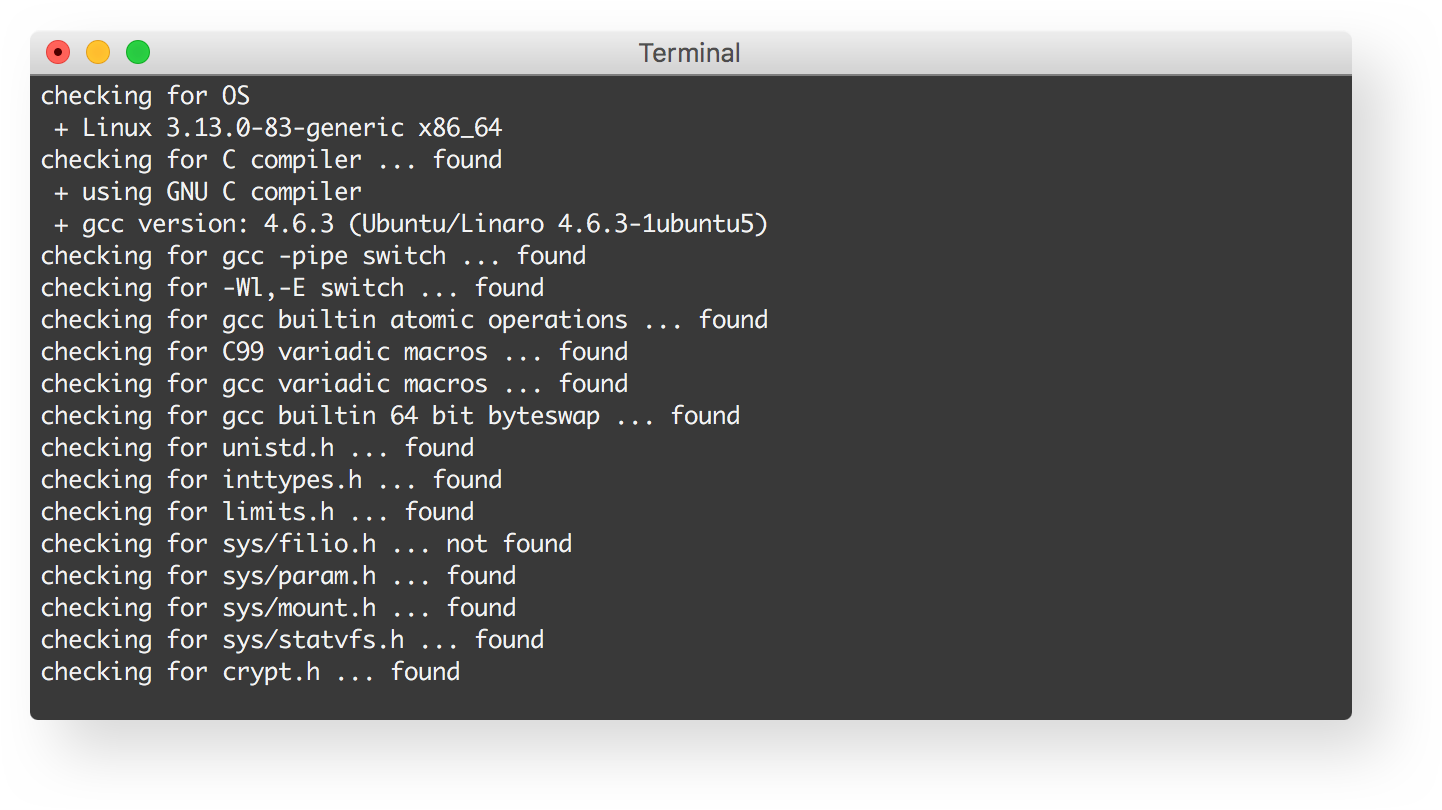

In 1991, when I was still happily coding BASIC on my C64, a guy named David Mackenzie started to work on a program that, I guess, sends shivers down the spine of several generations of C coders: autoconf. It is, today, a complex and unwieldy beast, but together with Larry Wall’s metaconf, it might actually be one of the first approaches of managing dependencies.

Step one: Define your dependencies and ensure they are available at build time.

Now, in contrast to, say, Ruby’s Bundler, autoconf does something interesting: Instead of checking for version numbers of dependencies, it checks for features, or abilities. This makes sense in the environments autoconf was supposed to work in, getting software to compile on vastly different systems like OpenBSD, SINIX or, a few years later, Linux. Of course, it’s much more complicated to build checks for abilities than for version numbers.

A few years forward, Linux distributions started to popularize the idea of “package managers”, the idea being that you could install programs and their dependencies without manually compiling all of them. Popular examples are dpkg/apt from Debian/Ubuntu and RPM from Red Hat. While there’s a difference between these system level package managers and language level ones, they share a lot of concepts. For one, they both need package repositories, or, at least, package indexes that point to a place where one would get a package. Also, package definitions contain metadata about the direct dependencies of that package so that the package manager can resolve these.

Step two: quickly find and install your dependencies.

One of the first package managers and package repositories specific to one programming language is CPAN for Perl which fully started in 1995. While CPAN (especially for people who didn’t grow up in Perl-Land) is kind of tricky to use and navigate, it definitely started some sort of movement. In 1999 PHP got PEAR which never really got the traction it deserved, which is a bit strange. To date, PEAR only contains about 600 packages, in contrast to CPAN that carries more than 170k Perl modules. A few years later, around 2003, Python finally gets something similar called PyPI (currently carrying over 97k packages). In 2004, Rubygems are born, back in the days as a part of rubyforge.org, a now defunct source hosting website. Rubygems.org, the successor, now carries over 128k packages.

The latest additions to this zoo would be npm (for node.js and, by now, JavaScript in general), with over 400k packages, NuGet for Windows/dotNet development at over 72k packages, CocoaPods for iOS and Mac development, now at 27k packages, Composer (tool) and Packagist (repository) for PHP which took over PEAR easily and are now at over 125k packages and last but not least Cargo, a package manager for Rust, a relatively new language that already has over 7k packages on crates.io.

So, dependency management is solved with all of these solutions, right?

Well, sorta, kinda. So far, we’ve talked about easily installing libraries and their dependencies, but how do you manage dependencies when building an application? In some systems, like npm, applications and libraries are treated the same. At the core, you have the package.json, that, among other things, defines your direct dependencies.

Rubygems, on the other hand, doesn’t give you a simple way of installing all the dependencies specified in a local gemspec (a metadata file used by Rubygems to, among other things, define the name, version, included files and also, dependencies of a gem). This sounds like an oversight, but most older package managers work the same way, so I guess it just didn’t occur to the people building Rubygems that this would be a useful feature.

There’s also another difference between application dependencies and library dependencies. When defining library dependencies, you want to make sure your dependencies are specified as loosely as technically possible to keep the scenario of dependency conflicts as small as possible. This is more important in a language like Ruby, that, for technical reasons, can only load one version of any given gem at a time - npm, in contrast, can load different versions of the same library at the same time and inject them according to the dependency tree. That’s why npm libraries often run a much more tight ship regarding the versions of their dependencies they support.

When building and deploying an application, you want the opposite: You want a defined set of dependencies that are known to work well together and you want to be able to reproduce this every time. Switching to a newer version of any given dependency should be a deliberate step and not just happen “at random” on a new installation.

Step three: lock your dependencies

The old approach to this has been to “vendor” your dependencies, which means that you extract them into your application source tree and check them in into your version control. Of course, that’s wasteful, inelegant and potentially makes updates a lot harder.

Pip, the 2004 replacement for Python’s easy_install, introduced a concept called requirements.txt, which could be used to specify a fixed set of needed libraries and versions. Pip would also generate these from the current set of installed libraries. This approach sort of works but has it’s drawbacks: The dependency loader in Python is not aware of that file, so regardless of what’s in that file, when you’ve installed an additional library, it will be available for the module loader. So you tend to forget to update your requirements.txt. Also, to make this work, you need to use separate environments for each application (otherwise dependencies would leak between apps), something that’s made easy by virtualenv.

Along comes Yehuda Katz’ Bundler in 2010 and introduces the Gemfile and Gemfile.lock. While the way of specifying dependencies in Gemfiles looks surprisingly similar to that in gemspecs, there are some important differences. A Gemfile specifies a definite source for your gems, for example. Also, you can specify, for those last minute bug fixes, gems that get pulled from git repos instead of from Rubygems.

And then, there’s the Gemfile.lock which snapshots all versions of your current dependencies. If you check that into your version control, your new colleague can simply check it out, run bundle install and he will have the exact same set of gems running in the application as you.

Most “modern” package managers like cargo, composer and cocoapods feature this lockfile approach. Npm does, too, in theory, but for many, npm shrinkwrap either doesn’t work or seems overly complex. This was one of the reasons why Yarn was born, an alternative frontend to npm which introduces a proper, working lockfile.

And, finally, Kenneth Reitz, mostly known for his Python Requests package, is currently working on Pipenv, which seems to be a clever way of combining pip and virtualenv to bring a Bundler like approach to Python as well.

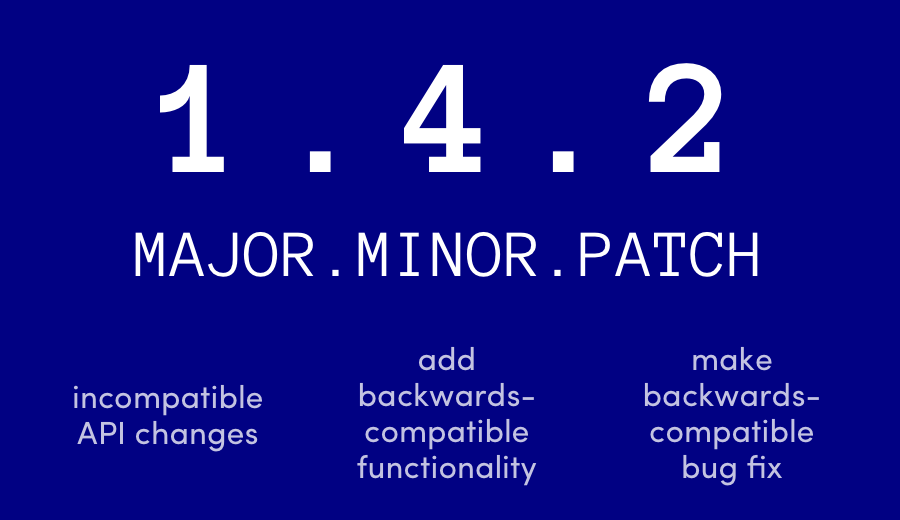

Step Four: Embrace Semantic Versioning

One thing I’ve left out so far is how different package managers let you specify version numbers, so let’s quickly dive into that. Before Semantic Versioning became a thing, behavior over a number of versions was basically undefined. It was good practice to not hide breaking changes in patch versions (the z in x.y.z), but it was more of a guideline than anything else.

Modern package managers all work very well with SemVer. Rubygems and Bundler support the twiddle-wakka, pip allows you to restrict version ranges and npm, being the new kid on the block, even has a semantic versioning operator, the caret, that lets you specify a minimum version number and all versions that should be API compatible in one go (“^1.2.3” basically means >= 1.2.3 and < 2.0.0).

This is important because it makes updating dependencies a lot easier. By specifying SemVer compatible versions in your Gemfile, for example, you can, in theory, upgrade your dependencies without fear of breaking things. Only major upgrades will require you to actually change the Gemfile. Of course, in reality, breaking changes in minor or patch versions still occur because humans make mistakes and not every project is commited to SemVer (most prominent exception, in Rubyland, unfortunately, is Rails which promises API compatibility only on minor versions), which is why you should always thoroughly test after upgrading.

Step Five: Profit?

In our last blog post, we already encouraged you to support Ruby Together, the non profit that supports the work on Bundler and RubyGems. Additionally, RubyCentral, a non profit mostly known for running both RubyConf and RailsConf, runs the RubyGems infrastructure. But how do other languages solve the problem of running infrastructure for an open source project? Python has the Python Foundation and also relies on sponsors to run large parts of the infrastructure. NuGet is run by the .NET foundation, so this seems to be a similar model. CPAN, on the other hand seems to rely on a less centralized structure as there are hundreds of mirrors run by volunteers and sponsors.

Then, a new model popped up, first tried out by npm, which is run as a for-profit, VC backed company that, besides hosting all the open source packages, provides paying customers with the possibility of hosting private packages. PHP’s packagist runs a similar model, but in contrast to npm the for-profit product and the open source variant are clearly separated. It will be interesting to see how this model plays out. Especially the introduction of VC money makes me a bit nervous, but I guess if the companies go belly-up, there’s always a strong incentive of the community to somehow rescue the thing (and both npm and packagist are largely open source). There’s also the Node.js foundation which might be able to take over if necessary.

All in all, in the last 25 years or so, dependency management has improved a lot. It is still a common point of frustration, especially if things break, but at least, in 2017, we all have pretty good ways to reliably and reproducibly install our dependencies, which is nothing to be frowned upon, if you think about it.

Jan Krutisch, Co-Founder at Depfu

Jan Krutisch, Co-Founder at Depfu